Local Host Cache

This article provides a complete overview of the Local Host Cache (LHC) feature, focusing on its ability to maintain business continuity. It explains the functionality of LHC both during normal working operations and when LHC mode activates. Additionally, the article offers guidance on configuration checks, troubleshooting, PowerShell commands for LHC management, and event log monitoring for LHC health.

Overview

LHC maintains end user access to apps and desktops when Cloud Connectors lose connectivity with Citrix Cloud™ due to a network disruption or an issue with Citrix Cloud. Active sessions are not impacted when LHC mode activates, and new sessions are brokered through the LHC broker running on the Cloud Connectors.

LHC is enabled by default, but it is important to ensure that your environment is configured properly for LHC. While misconfigurations might not disrupt normal brokering, they can hinder LHC performance, causing disruption to end users when LHC mode is active. To ensure optimal LHC functionality, check your Zones node in Studio for zone-related errors and warnings that Citrix has detected. Additionally, review the Check resiliency configurations guide and the Avoid Common Misconfigurations that Can Negatively Impact DaaS Resiliency article for a comprehensive LHC configuration checklist.

Important:

LHC only engages if Cloud Connectors receive traffic from StoreFront or if Service Continuity is enabled. For more information on how Service Continuity can use Local Host Cache to maintain user access to apps and desktops during service disruptions, see Service continuity.

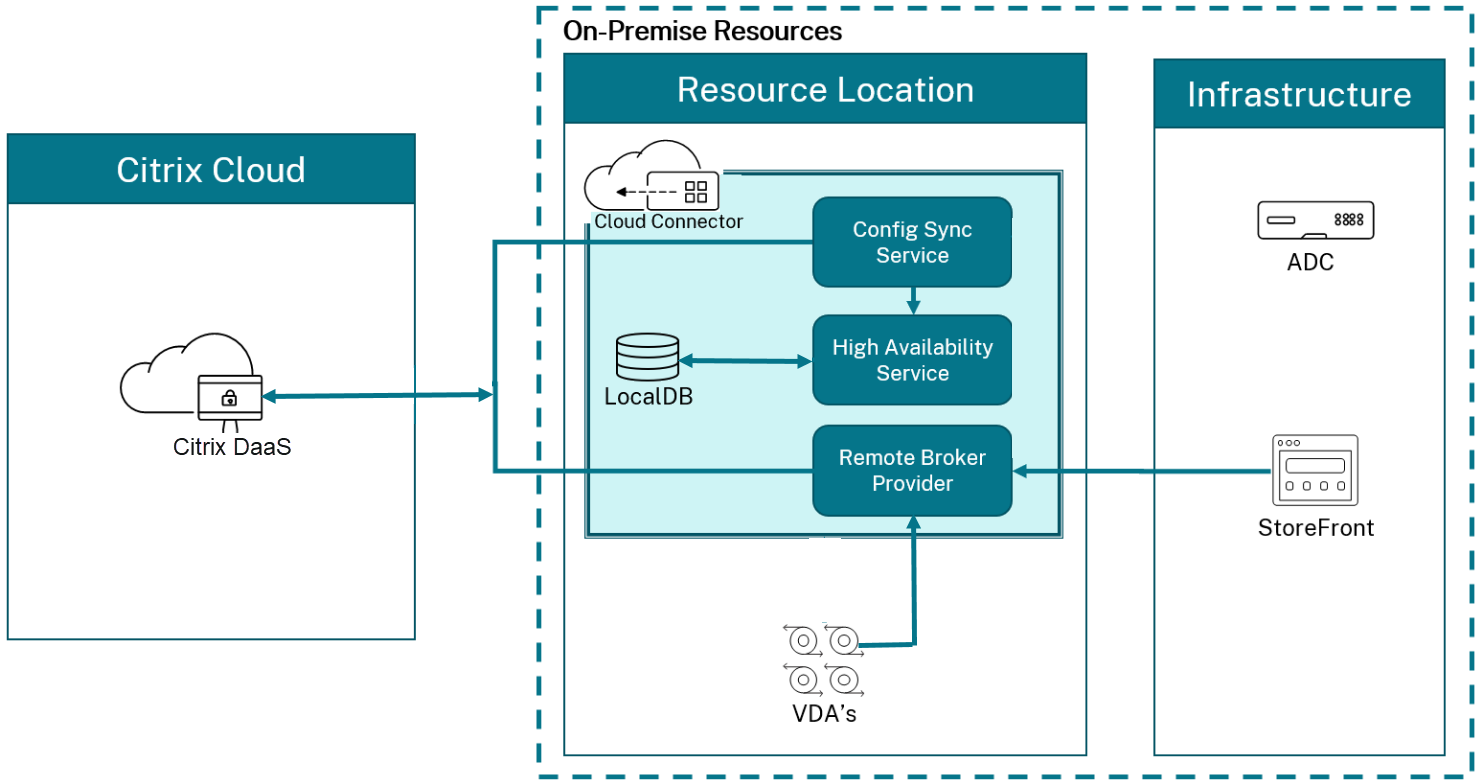

How it works

During normal operations, the Remote Broker Provider Service on a Cloud Connector communicates with Citrix Cloud for all brokering operations. The Citrix Config Synchronizer Service (CSS) regularly checks for configuration changes in Citrix Cloud and synchronizes this data to the LHC broker on the Cloud Connector. If Cloud Connectors lose connectivity to Citrix Cloud or Citrix Cloud is experiencing an issue, then LHC mode activates and ensures continued access to applications and desktops.

During normal brokering operation

- The Citrix Remote Broker Provider Service on a Cloud Connector accepts connection requests from StoreFront. The Remote Broker Provider Service communicates with Citrix Cloud to connect users with VDAs that are registered with Citrix Cloud.

- The CSS checks with the Cloud broker in Citrix Cloud approximately every 5 minutes to see if any configuration changes were made. Those changes can be administrator-initiated (such as changing a delivery group property) or system actions (such as machine assignments).

- If a configuration change occurred since the previous check, the CSS synchronizes information to the LHC broker on the Cloud Connector. (The LHC broker is also referred to as the High Availability Service, or HA broker.)

All configuration data is copied, not just items that changed since the previous check. The CSS imports the configuration data into a Microsoft SQL Server Express LocalDB database on the Cloud Connector. This database is referred to as the Local Host Cache database. The CSS ensures that the information in the Local Host Cache database matches the information in the site database in Citrix Cloud.

Microsoft SQL Server Express LocalDB (used by the LHC database) is installed automatically when you install a Cloud Connector. The LHC database cannot be shared across Cloud Connectors. You do not need to back up the LHC database. It is recreated every time a configuration change is detected.

- If no changes occurred since the last check, the configuration data is not copied.
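The polling behavior above can be modeled in a few lines. This is a minimal Python sketch, not Citrix code. The names LhcDatabase and css_poll are hypothetical. The integer version used to detect a change is also an assumption, because this article doesn't describe how the CSS detects changes. The sketch illustrates the two stated rules: nothing is copied when nothing changed, and a detected change triggers a full copy rather than a delta.

```python
from dataclasses import dataclass, field


@dataclass
class LhcDatabase:
    """Hypothetical stand-in for the LHC database on one Cloud Connector."""
    config: dict = field(default_factory=dict)
    imports: int = 0

    def full_import(self, snapshot: dict) -> None:
        # Every import replaces the whole configuration, not just changed items.
        self.config = dict(snapshot)
        self.imports += 1


def css_poll(cloud_config: dict, cloud_version: int,
             last_seen_version: int, db: LhcDatabase) -> int:
    """Run one CSS check and return the version now held in the LHC database.

    Assumption: a change is detected by comparing version numbers.
    """
    if cloud_version == last_seen_version:
        return last_seen_version  # no change since the last check: copy nothing
    db.full_import(cloud_config)  # change detected: copy all configuration data
    return cloud_version


if __name__ == "__main__":
    db = LhcDatabase()
    seen = 0
    # Three simulated checks (about 5 minutes apart by default): change, no change, change.
    for version, config in [(1, {"dg": "a"}), (1, {"dg": "a"}), (2, {"dg": "b"})]:
        seen = css_poll(config, version, seen, db)
    print(db.imports, db.config)
```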

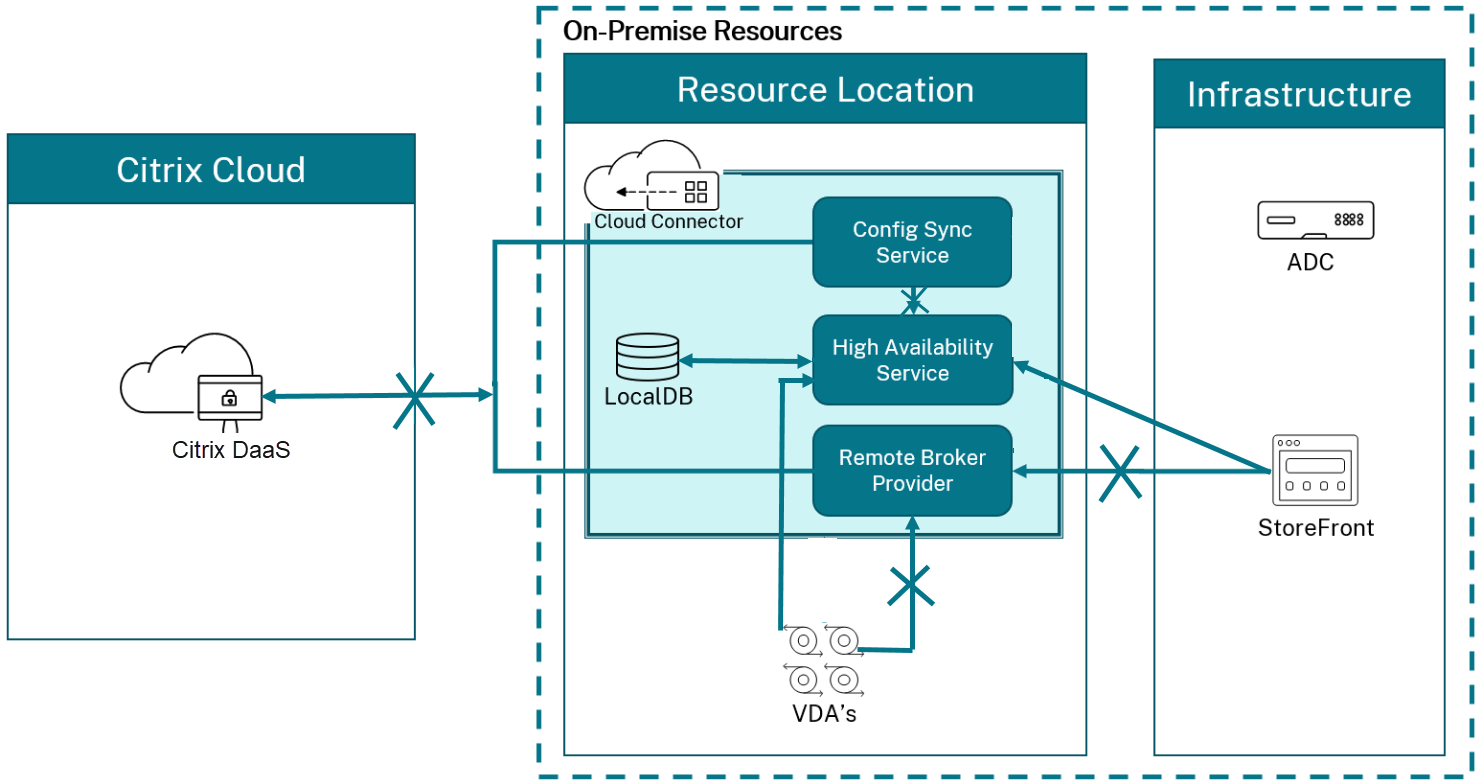

When LHC mode becomes active

- The LHC broker starts listening for connection information and processing connection requests.

- When the Cloud Connectors first lose connectivity to Citrix Cloud, the LHC broker does not have current VDA registration data, but when a VDA communicates with it, a registration process is triggered. During that process, the LHC broker also gets current session information for that VDA.

- While the LHC broker is handling connections, the Remote Broker Provider Service continues to monitor the connection to Citrix Cloud. When the connection is restored, the Remote Broker Provider Service instructs the LHC broker to stop listening for connection information, and Citrix Cloud resumes brokering operations. The next time a VDA communicates with Citrix Cloud through the Remote Broker Provider Service, a registration process is triggered. The LHC broker removes any remaining VDA registrations from when LHC mode was active. The CSS resumes synchronizing information when it learns that configuration changes have occurred in Citrix Cloud.

In the unlikely event that LHC mode activates during a synchronization, the current import is discarded and the last known configuration is used.

The event log indicates when synchronizations occur and when LHC mode activates.

There is no time limit imposed for operating in LHC mode.

You can also manually trigger LHC mode. See Force LHC mode for details about why and how to do this.

Zones with multiple Cloud Connectors

Among other tasks, the CSS routinely provides the LHC broker with information about all Cloud Connectors in the zone. Having that information, each LHC broker knows about all peer LHC brokers running on other Cloud Connectors in the zone.

The LHC brokers communicate with each other on a separate channel. They use an alphabetical list of the FQDNs of the machines they run on to determine (elect) which LHC broker handles brokering operations in the zone if the zone enters LHC mode. During LHC mode, all VDAs re-register with the elected LHC broker. The non-elected LHC brokers in the zone actively reject incoming connection and VDA registration requests.

Important:

Connectors within a zone must be able to reach each other at http://<FQDN_OF_PEER_CONNECTOR>:80/Citrix/CdsController/ISecondaryBrokerElection. If Connectors cannot communicate at this address, multiple brokers might be elected, and launch failures occur when in LHC mode.
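One quick way to confirm this requirement is to request the election URL from each Cloud Connector to each of its peers. The following Python sketch uses only the standard library, and its function names are hypothetical. It treats any HTTP response, even an error status, as "reachable". That rule is an assumption, because this article doesn't document what the endpoint returns. The sketch checks network reachability only, not whether an election succeeds.

```python
import http.client
import urllib.error
import urllib.request

ELECTION_PATH = "/Citrix/CdsController/ISecondaryBrokerElection"


def election_url(peer_fqdn: str) -> str:
    """Build the peer election URL that connectors in a zone must reach."""
    return f"http://{peer_fqdn}:80{ELECTION_PATH}"


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # Caught before URLError (its base class): an error status still
        # proves that something answered HTTP at this address.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # DNS failure, refused connection, timeout, or a non-HTTP reply.
        return False


def unreachable_peers(peer_fqdns: list[str], timeout: float = 5.0) -> list[str]:
    """Return the peers whose election URL could not be reached."""
    return [p for p in peer_fqdns if not is_reachable(election_url(p), timeout)]


if __name__ == "__main__":
    # Hypothetical peer names; run this on each connector against the others.
    print(unreachable_peers(["cc02.corp.example.com", "cc03.corp.example.com"], timeout=2))
```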

During LHC mode, if a Cloud Connector is restarted or the LHC broker fails:

- If that Cloud Connector is not the elected LHC broker, the restart has no impact.

- If that Cloud Connector is the elected LHC broker, the LHC broker on the next Cloud Connector in alphabetical FQDN order is elected, and VDAs re-register with it. When the restarted LHC broker becomes active again, LHC operations move back to the first connector in alphabetical order, which can cause another round of VDA registrations. In this scenario, performance can be affected during the registrations.
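The election rule described above reduces to "first available connector in alphabetical FQDN order." The Python sketch below models only that rule. It does not show how the LHC brokers actually exchange election messages. The case-insensitive sort is an assumption, based on DNS names being case-insensitive. The usage lines walk through the restart scenario in the list above.

```python
from typing import Iterable, Optional


def election_order(connector_fqdns: Iterable[str]) -> list[str]:
    """Connectors sorted alphabetically by FQDN (case-insensitive, by assumption)."""
    return sorted(connector_fqdns, key=str.casefold)


def elected_broker(connector_fqdns: Iterable[str],
                   available: set[str]) -> Optional[str]:
    """Return the first connector in election order whose LHC broker is up."""
    for fqdn in election_order(connector_fqdns):
        if fqdn in available:
            return fqdn
    return None  # no LHC broker in the zone can take over


if __name__ == "__main__":
    zone = ["cc-b.corp.example.com", "cc-a.corp.example.com", "cc-c.corp.example.com"]
    print(elected_broker(zone, set(zone)))  # cc-a is elected
    # cc-a restarting: cc-b takes over and VDAs re-register with it
    print(elected_broker(zone, set(zone) - {"cc-a.corp.example.com"}))
    print(elected_broker(zone, set(zone)))  # cc-a back: brokering returns to cc-a
```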

See event logs for more information regarding events pertaining to LHC broker elections.

Local Host Cache data content

The LHC database includes the following data, which is a subset of the data in the main database:

- Identities of users and groups who are assigned rights to resources published from the site.

- Identities of users who are currently using, or who have recently used, published resources from the site.

- Identities of VDA machines (including Remote PC Access machines) configured in the site.

- Identities (names and IP addresses) of client Citrix Workspace™ app machines being actively used to connect to published resources.

It also contains the following information for currently active connections that were established while in LHC mode:

- Results of any client machine endpoint analysis performed by Citrix Workspace app.

- Identities of infrastructure machines (such as Citrix Gateway and StoreFront servers) involved with the site.

- Dates, times, and types of recent activity by users.

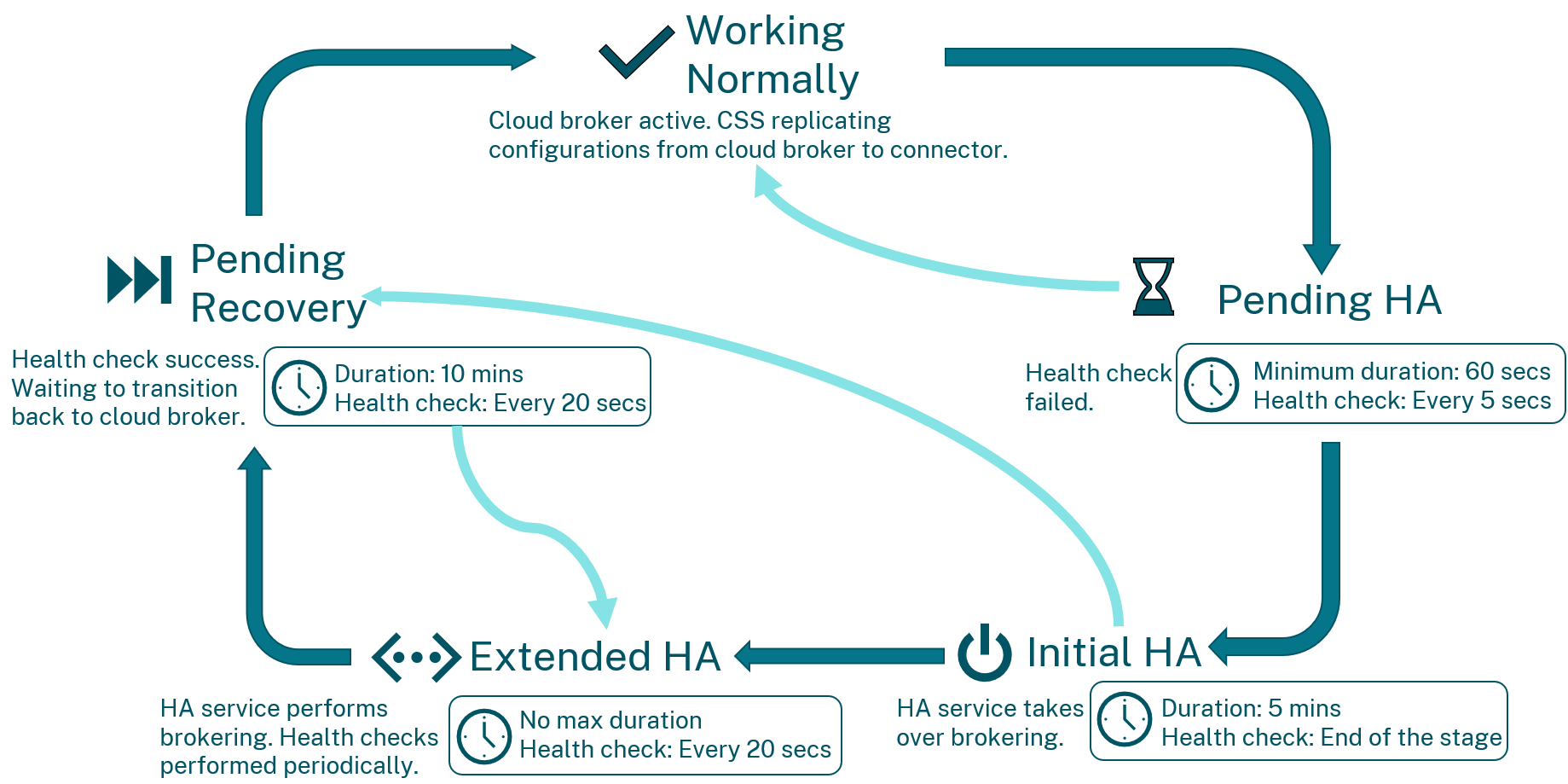

Local Host Cache states

There are several states during the entire cycle of entering and exiting LHC mode. The following diagram describes the states for entering and exiting LHC mode.

- During the Working Normally state, all components are healthy and all brokering transactions are handled by the Cloud broker. The CSS actively replicates the configuration from the Cloud broker to the Cloud Connectors. If any health checks fail, the Cloud Connectors transition to the Pending HA state. In this state, a comprehensive health check is initiated to determine the next course of action. The connectors interact with other connectors in the zone to determine their health status.

- The decision to move from Pending HA to Initial HA is based on the health status of all the connectors in a given zone. If the health checks are successful, the connectors/controllers transition back to the Working Normally state. Alternatively, if the health checks continue to fail, the connectors/controllers transition to the Initial HA state.

- During the Initial HA state, the LHC broker on the elected connector takes over brokering responsibilities. All VDAs in the zone that were previously registered now re-register with the LHC broker on the elected Cloud Connector. It can take up to 5 minutes for all VDAs to re-register. At the end of Initial HA, health checks are initiated. If all health checks succeed, the state transitions to Pending Recovery; otherwise, the state transitions to Extended HA.

- Health checks continue to occur during the Extended HA period. When health checks succeed during the Extended HA, the state transitions to Pending Recovery. There is no maximum time duration for a connector to remain in the Extended HA state.

- Pending Recovery serves as a buffer period to ensure that services are fully healthy before transitioning out of LHC mode. If any of the health checks fail during Pending Recovery, the state transitions back to Extended HA.

- If all the health checks succeed during the entirety of the 10-minute Pending Recovery period, then the state transitions to Working Normally. With this transition, LHC mode ends, and all the VDAs in the zone that were registered with the LHC broker now re-register with the Cloud broker. This re-registration can again take up to 5 minutes.
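The state transitions above can be summarized as a small state machine. The Python sketch below is a simplified model, not Citrix code, and its names are hypothetical. It assumes that each call represents the end of a state's health evaluation. For Pending Recovery, checks_passed=True therefore means every check passed for the full 10-minute window. In this model, LHC mode covers Initial HA, Extended HA, and Pending Recovery, because LHC mode ends only on the transition back to Working Normally.

```python
from enum import Enum


class LhcState(Enum):
    WORKING_NORMALLY = "Working Normally"
    PENDING_HA = "Pending HA"
    INITIAL_HA = "Initial HA"
    EXTENDED_HA = "Extended HA"
    PENDING_RECOVERY = "Pending Recovery"


# States in which the elected LHC broker, not the Cloud broker, handles brokering.
LHC_ACTIVE_STATES = {LhcState.INITIAL_HA, LhcState.EXTENDED_HA, LhcState.PENDING_RECOVERY}

# (state, health checks passed?) -> next state, per the list above.
TRANSITIONS = {
    (LhcState.WORKING_NORMALLY, True): LhcState.WORKING_NORMALLY,
    (LhcState.WORKING_NORMALLY, False): LhcState.PENDING_HA,
    (LhcState.PENDING_HA, True): LhcState.WORKING_NORMALLY,
    (LhcState.PENDING_HA, False): LhcState.INITIAL_HA,
    (LhcState.INITIAL_HA, True): LhcState.PENDING_RECOVERY,
    (LhcState.INITIAL_HA, False): LhcState.EXTENDED_HA,
    (LhcState.EXTENDED_HA, True): LhcState.PENDING_RECOVERY,
    (LhcState.EXTENDED_HA, False): LhcState.EXTENDED_HA,  # no maximum duration
    # For Pending Recovery, True means checks passed for the whole 10 minutes.
    (LhcState.PENDING_RECOVERY, True): LhcState.WORKING_NORMALLY,
    (LhcState.PENDING_RECOVERY, False): LhcState.EXTENDED_HA,
}


def next_state(state: LhcState, checks_passed: bool) -> LhcState:
    """Return the state entered when `state` finishes its health evaluation."""
    return TRANSITIONS[(state, checks_passed)]


def in_lhc_mode(state: LhcState) -> bool:
    """True while the zone is brokered by the LHC broker."""
    return state in LHC_ACTIVE_STATES


if __name__ == "__main__":
    state = LhcState.WORKING_NORMALLY
    # An outage (three failed evaluations), then recovery.
    for passed in [False, False, False, True, True]:
        state = next_state(state, passed)
        print(f"{state.value:<17} LHC mode: {in_lhc_mode(state)}")
```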

Important considerations during LHC mode

During LHC mode, consider the following impacts:

| Aspect | Impact During LHC Mode |
|---|---|
| Studio Access | Might be inaccessible, depending on the nature of the disruption. VDAs in zones operating in LHC mode display as Unregistered in Studio because they are registered with the LHC broker. |
| Remote PowerShell SDK Access | Limited access. Set SDK authentication: run Get-XDAuthentication -ProfileName WindowsCurrentUser to prevent the SDK proxy from redirecting cmdlet calls. After making this change, you can use all Get-Broker cmdlets. Note: Include the -AdminAddress localhost:89 parameter in the initial cmdlet call. Example: Get-BrokerMachine -AdminAddress localhost:89 |
| Monitoring Data | Monitor functions don’t show activity from when LHC mode is active. A subset of monitoring data is available in the Local Host Cache dashboard of the trends page in Monitor. |
| Hypervisor Credentials | Cannot be obtained from the Host Service. Machines are in an unknown power state, and no power operations are possible. Powered-on VMs can still be used for connections. |
| Assigned Machines | Usable only if assigned during normal operations. New assignments are not possible in LHC mode. |
| Remote PC Access Machines | Automatic enrollment and configuration are not supported. Machines enrolled and configured during normal operations are usable. |
| Server-Hosted Application and Desktops Session Limits | Users might use more sessions than their configured session limits if the resources are in different zones. |
| Zone Behavior | Each zone acts independently. If the StoreFront advanced health check feature is enabled, StoreFront can route launch requests to the appropriate zone during LHC mode and avoid session launch failures. |
| Scheduled VDA Restarts | If LHC mode is entered before a scheduled restart begins for VDAs in a delivery group, the restarts begin when LHC mode exits and normal brokering operations resume, potentially causing unexpected reboots when the zone leaves LHC mode. For more information and configurations that can alter this behavior, see Scheduled restarts delayed due to database outage. |
| Zone Preference | Zone preference configurations are not considered for session launch. |
| Tag Restrictions | For delivery groups with VDAs in multiple zones, tag restrictions can cause launch failures if tagged VDAs are not present in all zones. |

Note:

The use of $XDSDKAuth for SDK authentication is deprecated starting June 2025. For details, see Deprecation.

Application and desktop support

LHC supports the following types of VDAs and delivery models:

| VDA type | Delivery model | VDA availability during LHC mode |
|---|---|---|
| Multi-session OS | Applications and desktops | Always available. |
| Single-session OS static (assigned) | Desktops | Always available. |
| Power-managed single-session OS random (pooled) | Desktops | Available for only one session by default. You can configure them to be always available for new sessions during LHC. For more information, see Enable using Studio and Enable using PowerShell. Important: Enabling access to power-managed single-session pooled machines can cause data and changes from previous user sessions to be present in subsequent sessions. |

Note:

Enabling access to power-managed desktop VDAs in pooled delivery groups doesn’t affect how the configured ShutdownDesktopsAfterUse property works during normal operations. When access to these desktops during LHC is enabled, VDAs don’t automatically restart after returning to normal brokering operations. Power-managed desktop VDAs in pooled delivery groups can retain data from previous sessions until the VDAs restart. A VDA restart can occur when a user logs off the VDA during non-LHC operations or when administrators restart the VDA.

Enable LHC for power-managed single-session OS pooled VDAs using Studio

Using Studio, you can make those machines available for new connections during LHC mode on a per-delivery group basis:

- To enable this feature during delivery group creation, see Create delivery groups.

- To enable this feature for an existing delivery group, see Manage delivery groups.

Note:

This setting is available in Studio only for pooled desktop delivery groups that deliver power-managed VDAs.

Enable LHC for power-managed single-session OS pooled VDAs using PowerShell

To enable LHC for VDAs in a specific delivery group, follow these steps:

- Enable this feature at the site level by running this command:

  Set-BrokerSite -ReuseMachinesWithoutShutdownInOutageAllowed $true

- Enable LHC for a delivery group by running this command with the delivery group name specified:

  Set-BrokerDesktopGroup -Name "name" -ReuseMachinesWithoutShutdownInOutage $true

To change the default LHC availability for newly created pooled delivery groups with power managed VDAs, run the following command:

Set-BrokerSite -DefaultReuseMachinesWithoutShutdownInOutage $true


Note:

Changing the default does not change the setting for existing Delivery Groups and only affects Delivery Groups created using PowerShell.

StoreFront configuration

If using an on-premises StoreFront deployment, review the following:

- If using a load balancing virtual server, configure the virtual server to monitor Connectors based on brokering capabilities (for example, use the built-in CITRIX-XD-DDC monitor on a NetScaler® for connector load balancing).

- Include all Cloud Connectors within a single Cloud tenant as a single resource feed in StoreFront.

- View NetScaler Gateway configurations in StoreFront and ensure that all Connectors are listed as STA servers. Review NetScaler appliances and ensure all STAs listed in StoreFront are in the same format in the NetScaler Gateway virtual server. STA service health can also be monitored in the Gateway virtual server.

- Add all connectors to the resource feed in StoreFront and ensure that StoreFront can communicate with all Cloud Connectors over the port designated in the resource feed.

Note:

For customers with many connectors, it may be beneficial to configure a load-balancing virtual server for each zone to reduce management overhead and simplify troubleshooting. See Citrix TIPs: Integrating Citrix Virtual Apps and Desktops service and StoreFront for more information.

Validate that Local Host Cache is working

To verify that LHC is set up and working correctly:

- Ensure that synchronization imports complete successfully. Check the event logs for more information on how to monitor LHC synchronizations.

- Ensure that the Local Host Cache database was created on each Cloud Connector. This confirms that the High Availability Service can take over, if needed.

  - On the Cloud Connector server, browse to c:\Windows\ServiceProfiles\NetworkService.

  - Verify that HaDatabaseName.mdf and HaDatabaseName_log.ldf are created.

- Force LHC mode on all Cloud Connectors in the zone. After you’ve verified that Local Host Cache works, remember to place all the Cloud Connectors back into normal mode. See Local Host Cache states for more information regarding the time it takes to leave LHC mode.
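The database-file check in the list above can be scripted. This minimal Python sketch looks for the two files named in this article directly in the NetworkService profile folder. The function name is hypothetical, and reading that folder may require an elevated session.

```python
from pathlib import Path

# File names and folder as given in this article.
LHC_DB_FILES = ("HaDatabaseName.mdf", "HaDatabaseName_log.ldf")
DEFAULT_ROOT = Path(r"C:\Windows\ServiceProfiles\NetworkService")


def missing_lhc_db_files(root: Path = DEFAULT_ROOT) -> list[str]:
    """Return the LHC database files that are not present in root."""
    return [name for name in LHC_DB_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_lhc_db_files()
    if missing:
        print("LHC database not fully created; missing:", ", ".join(missing))
    else:
        print("LHC database files found.")
```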

Monitor Local Host Cache

Event logs

Event logs provide critical information regarding the health and performance of LHC.

Config Synchronizer Service

During normal operations, the following events can occur when the CSS imports the configuration data into the LHC database using the LHC broker.

| Event ID | Description |
|---|---|
| 503 | Indicates that the CSS received an updated configuration. This event occurs each time an updated configuration is received from Citrix Cloud and marks the start of the synchronization process. |
| 504 | Indicates that the CSS imported an updated configuration. The configuration import completed successfully. |
| 505 | Indicates that the CSS failed an import. The configuration import did not complete successfully. If a previous successful configuration is available, it is used if LHC mode is entered; however, it is out of date relative to the current configuration. If no previous configuration is available, the service cannot participate in session brokering during LHC mode. In this case, see the Troubleshoot section and contact Citrix Support. |
| 507 | Indicates that the CSS abandoned an import because the system is in LHC mode and the LHC broker is being used for brokering. The service received a new configuration, but the import was abandoned because LHC mode was entered. This is expected behavior. |
| 510 | Indicates that the CSS received no configuration data from the primary Configuration Service. |
| 517 | Indicates that there was a problem communicating with the primary Broker Service. |
| 518 | Indicates that the CSS script aborted because the LHC broker (High Availability Service) is not running. |
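If you collect CSS event IDs in chronological order (for example, exported from Event Viewer; the collection step isn't shown), the table above is enough to tell whether the latest synchronization succeeded. This Python sketch is hypothetical. It treats 504, 505, 507, and 518 as the events that end an import attempt. That rule is a reading of the table, not documented behavior.

```python
from typing import Optional, Sequence

CSS_EVENTS = {
    503: "updated configuration received (synchronization started)",
    504: "import succeeded",
    505: "import failed",
    507: "import abandoned because LHC mode was entered",
    510: "no configuration data received from the primary Configuration Service",
    517: "problem communicating with the primary Broker Service",
    518: "aborted because the High Availability Service is not running",
}

# Assumption: these IDs end an import attempt; 510 and 517 are informational.
TERMINAL_EVENTS = {504, 505, 507, 518}


def last_import_outcome(event_ids: Sequence[int]) -> Optional[str]:
    """Describe the most recent import attempt from chronological CSS event IDs."""
    for event_id in reversed(event_ids):
        if event_id in TERMINAL_EVENTS:
            return CSS_EVENTS[event_id]
        if event_id == 503:
            return "synchronization started; no outcome logged yet"
    return None  # no import activity in the supplied events


if __name__ == "__main__":
    print(last_import_outcome([503, 504, 503, 505]))
```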

High Availability Service

This service is also known as the LHC broker.

| Event ID | Description |
|---|---|
| 3502 | Indicates that an outage occurred and the LHC broker is performing broker operations. |
| 3503 | Indicates that an outage was resolved and normal operations have resumed. |
| 3504 | Identifies the elected LHC broker and the other LHC brokers involved in the election. |
| 3507 | Indicates that LHC mode is active on the elected LHC broker. Contains a summary of the outage, including outage duration, VDA registration, and session information. |
| 3508 | Indicates that LHC mode is no longer active on the elected LHC broker and normal operations have been restored. Contains a summary of the outage, including outage duration, the number of machines that registered during the LHC event, and the number of successful launches during LHC mode. |
| 3509 | Indicates that LHC mode is active on a non-elected LHC broker. Logged every 2 minutes with the current outage duration, and identifies the elected LHC broker. |
| 3510 | Indicates that LHC mode is no longer active on a non-elected LHC broker. Contains the outage duration and identifies the elected LHC broker. |

Remote Broker Provider

This service acts as a proxy between Citrix Cloud and your VDAs and Cloud Connectors.

| Event ID | Description |
|---|---|
| 3001 | Indicates that the Cloud Connector is checking whether it must enter LHC mode. Logged after a single failed health check of the Cloud Connector. If another check fails 90 seconds later, the Cloud Connector transitions into LHC mode. |
| 3002 | Indicates that the Cloud Connector cannot enter LHC mode. The reason is included in the event information. |
| 3003 | Indicates that the Cloud Connector is transitioning through LHC mode states. The event provides details about the following |

Note:

Occasional 3001 events on your Cloud Connectors throughout the day are typically not a cause for concern. However, if they occur multiple times an hour, this indicates a network issue that might warrant further investigation.
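To apply the guidance in this note, count 3001 events in a rolling one-hour window. The Python sketch below assumes you already have the event timestamps; how you export them isn't shown. Its default threshold of 2 is a literal reading of "multiple times an hour", not a Citrix-defined limit, so tune it to your environment.

```python
from datetime import datetime, timedelta
from typing import Iterable

WINDOW = timedelta(hours=1)


def max_events_per_hour(timestamps: Iterable[datetime]) -> int:
    """Largest number of events falling within any rolling 60-minute window."""
    times = sorted(timestamps)
    best = 0
    start = 0
    for end, current in enumerate(times):
        # Drop events that are a full hour or more older than the current one.
        while current - times[start] >= WINDOW:
            start += 1
        best = max(best, end - start + 1)
    return best


def should_investigate_3001(timestamps: Iterable[datetime], threshold: int = 2) -> bool:
    """True when 3001 events reach `threshold` within a single hour (assumed limit)."""
    return max_events_per_hour(timestamps) >= threshold


if __name__ == "__main__":
    base = datetime(2025, 1, 6, 8, 0)
    spread_out = [base + timedelta(hours=4 * i) for i in range(4)]
    burst = [base, base + timedelta(minutes=12), base + timedelta(minutes=40)]
    print(should_investigate_3001(spread_out), should_investigate_3001(burst))
```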

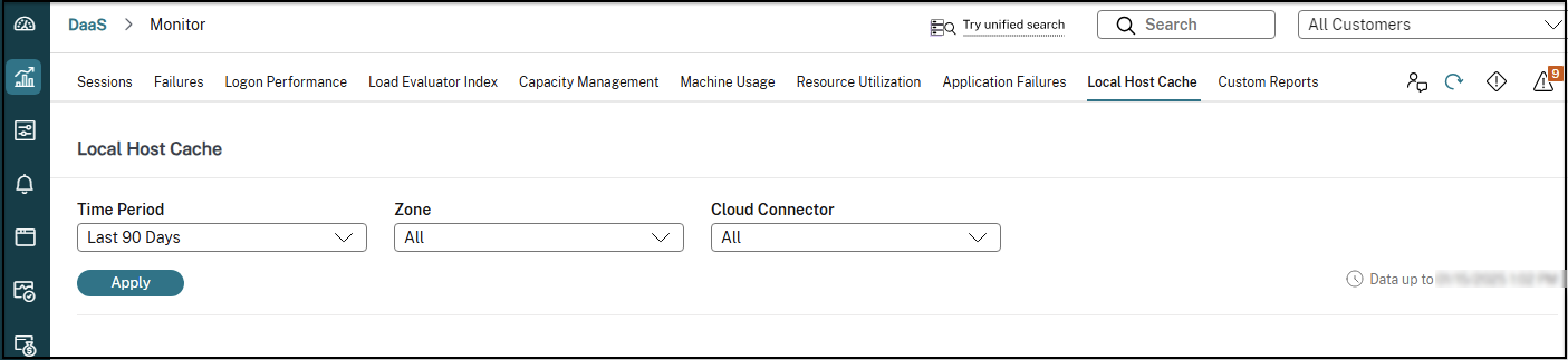

Citrix Monitor

Citrix Monitor contains centralized information regarding LHC mode entries and performance for the different Zones in your environment.

See Monitor historical trends across a site for more information.

Force Local Host Cache mode

You might want to deliberately force Local Host Cache mode in the following scenarios:

- If your network is going up and down repeatedly: Forcing LHC until the network issues are resolved prevents continuous transition between normal and LHC modes (and the resulting frequent VDA registration storms).

- To test a disaster recovery plan.

- To help ensure that Local Host Cache is working correctly.

To force LHC mode:

- Edit the registry of each Cloud Connector server in HKLM\Software\Citrix\DesktopServer\LHC: create OutageModeForced as a REG_DWORD and set it to 1.

  - Setting the value to 1 instructs the LHC broker to enter LHC mode, regardless of the state of the connection to Citrix Cloud.

  - Setting the value to 0 instructs the LHC broker to exit LHC mode and resume normal operations.

To verify events:

- Monitor the Current_HighAvailabilityService log file in C:\ProgramData\Citrix\workspaceCloud\Logs\Plugins\HighAvailabilityService.
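To follow the log without opening it in an editor, a short script can print its most recent lines. This Python sketch is hypothetical. It matches any file whose name starts with Current_HighAvailabilityService, because the file extension isn't given here. It also assumes a UTF-8 or ASCII text log; change the encoding if the file uses another.

```python
from collections import deque
from pathlib import Path

HA_LOG_DIR = Path(r"C:\ProgramData\Citrix\workspaceCloud\Logs\Plugins\HighAvailabilityService")


def tail_ha_log(log_dir: Path = HA_LOG_DIR, lines: int = 50) -> list[str]:
    """Return the last `lines` lines of the newest Current_HighAvailabilityService file."""
    candidates = sorted(log_dir.glob("Current_HighAvailabilityService*"),
                        key=lambda p: p.stat().st_mtime)
    if not candidates:
        raise FileNotFoundError(f"no Current_HighAvailabilityService log in {log_dir}")
    # A deque with maxlen keeps only the final lines while streaming the file.
    with candidates[-1].open(encoding="utf-8", errors="replace") as fh:
        return [line.rstrip("\n") for line in deque(fh, maxlen=lines)]


if __name__ == "__main__":
    for line in tail_ha_log(lines=20):
        print(line)
```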

Troubleshoot synchronization import failures

When a synchronization import to the LHC database fails and a 505 event is logged, you can use the following troubleshooting tools:

- CDF Tracing:

  - Enable tracing for the ConfigSyncServer and BrokerLHC modules.

  - Use with other broker modules to identify the problem.

- CSS Trace Report:

  - Generates a detailed report that pinpoints the object causing the synchronization failure.

Note:

Enabling this report can impact synchronization speed. Disable it when not actively troubleshooting.

To enable and generate a CSS Trace Report:

- Enable reporting: Run the following command:

  New-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTraceMode -PropertyType DWORD -Value 1

- Locate the report: The HTML report is generated at C:\Windows\ServiceProfiles\NetworkService\AppData\Local\Temp\CitrixBrokerConfigSyncReport.html.

- Disable reporting: After the report is generated, run the following command to disable the reporting feature:

  Set-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTraceMode -Value 0

Local Host Cache PowerShell commands

You can manage Local Host Cache on your Cloud Connectors using PowerShell commands.

The PowerShell module is at the following location on the Cloud Connectors:

C:\Program Files\Citrix\Broker\Service\ControlScripts

Important:

Run this module only on the Cloud Connectors.

Import PowerShell module

To import the module, run the following on your Cloud Connector:

Import-Module "C:\Program Files\Citrix\Broker\Service\ControlScripts\HighAvailabilityServiceControl.psm1"

PowerShell commands to manage LHC

The following cmdlets help you to activate and manage the LHC mode on the Cloud Connectors.

| Cmdlet | Function |
|---|---|
| Enable-LhcForcedOutageMode | Places the broker in LHC mode. The CSS must have successfully created the Local Host Cache database files for Enable-LhcForcedOutageMode to function properly. This cmdlet forces LHC only on the Cloud Connector where it is run. For LHC to become active, run this cmdlet on all Cloud Connectors in the zone. |
| Disable-LhcForcedOutageMode | Takes the broker out of LHC mode. This cmdlet disables LHC mode only on the Cloud Connector where it is run, so run Disable-LhcForcedOutageMode on all Cloud Connectors in the zone. |
| Set-LhcConfigSyncIntervalOverride | Sets the interval at which the CSS checks for configuration changes within the Citrix DaaS™ site. The interval can range from 60 seconds (one minute) to 3600 seconds (one hour). This setting applies only to the Cloud Connector where it is run. For consistency across the Cloud Connectors, consider running this cmdlet on each Cloud Connector. For example: Set-LhcConfigSyncIntervalOverride -Seconds 1200 |
| Clear-LhcConfigSyncIntervalOverride | Resets the interval at which the CSS checks for configuration changes within the Citrix DaaS site to the default value of 300 seconds (five minutes). This setting applies only to the Cloud Connector where it is run. For consistency across the Cloud Connectors, consider running this cmdlet on each Cloud Connector. |
| Enable-LhcHighAvailabilitySDK | Enables access to all the Get-Broker* cmdlets on the Cloud Connector where it is run. |
| Disable-LhcHighAvailabilitySDK | Disables access to the Broker PowerShell commands on the Cloud Connector where it is run. |
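The interval rules in the table above can be expressed as a small validation helper, for example when auditing which interval each connector should be using. This Python sketch is hypothetical. It assumes the 60 to 3600 second range is inclusive and that out-of-range values should be rejected. The article doesn't say how the cmdlet itself handles such values.

```python
from typing import Mapping, Optional

DEFAULT_SYNC_INTERVAL_S = 300  # value restored by Clear-LhcConfigSyncIntervalOverride
MIN_SYNC_INTERVAL_S = 60
MAX_SYNC_INTERVAL_S = 3600


def effective_sync_interval(override_s: Optional[int] = None) -> int:
    """Interval one connector uses: its override if set, else the default."""
    if override_s is None:
        return DEFAULT_SYNC_INTERVAL_S
    if not MIN_SYNC_INTERVAL_S <= override_s <= MAX_SYNC_INTERVAL_S:
        raise ValueError(
            f"{override_s}s is outside {MIN_SYNC_INTERVAL_S}-{MAX_SYNC_INTERVAL_S}s")
    return override_s


def intervals_consistent(overrides: Mapping[str, Optional[int]]) -> bool:
    """True if every connector (FQDN -> override or None) uses the same interval."""
    return len({effective_sync_interval(v) for v in overrides.values()}) <= 1


if __name__ == "__main__":
    zone = {"cc01.corp.example.com": 1200, "cc02.corp.example.com": None}
    print(intervals_consistent(zone))  # False: cc02 still uses the 300 s default
```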

Note:

- Use port 89 when running the Get-Broker* cmdlets on the Cloud Connector. For example: Get-BrokerMachine -AdminAddress localhost:89

- When not in LHC mode, the LHC broker on the Cloud Connector holds only configuration information.

- During LHC mode, the LHC broker holds the following information:

  - Resource states

  - Session details

  - VDA registrations

  - Configuration information

More information

- See Scale and size considerations for Local Host Cache for information about:

  - Testing methodologies and results

  - RAM size considerations

  - CPU core and socket configuration considerations

  - Storage considerations