Local Host Cache

To ensure that the Citrix Virtual Apps and Desktops site database is always available, Citrix recommends starting with a fault-tolerant SQL Server deployment, by following high availability best practices from Microsoft. (For supported SQL Server high availability features, see Databases.) However, network issues and interruptions can result in users not being able to connect to their applications or desktops.

The Local Host Cache feature allows connection brokering operations in a site to continue when an outage occurs. An outage occurs when the connection between a Delivery Controller™ and the site database fails in an on-premises Citrix® environment. Local Host Cache engages when the site database is inaccessible for 90 seconds.

As of XenApp and XenDesktop 7.16, the connection leasing feature (a predecessor high availability feature in earlier releases) was removed from the product, and is no longer available.

Data content

Local Host Cache includes the following information, which is a subset of the information in the main database:

- Identities of users and groups who are assigned rights to resources published from the site.

- Identities of users who are currently using, or who have recently used, published resources from the site.

- Identities of VDA machines (including Remote PC Access machines) configured in the site.

- Identities (names and IP addresses) of client Citrix Receiver™ machines being actively used to connect to published resources.

It also contains information for currently active connections that were established while the main database was unavailable:

- Results of any client machine endpoint analysis performed by Citrix Receiver.

- Identities of infrastructure machines (such as NetScaler Gateway and StoreFront™ servers) involved with the site.

- Dates and times and types of recent activity by users.

How it works

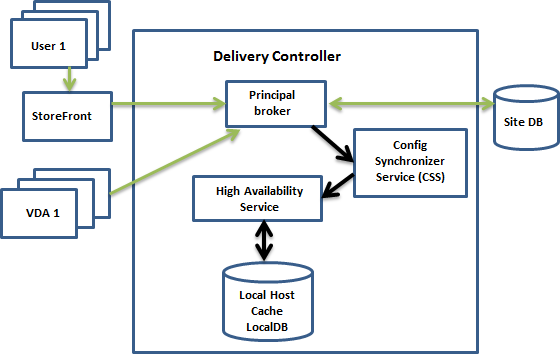

The following graphic illustrates the Local Host Cache components and communication paths during normal operations.

During normal operations

- The principal broker (Citrix Broker Service) on a Controller accepts connection requests from StoreFront. The broker communicates with the site database to connect users with VDAs that are registered with the Controller.

- The Citrix Config Synchronizer Service (CSS) checks with the broker approximately every 5 minutes to see if any changes were made. Those changes can be administrator-initiated (such as changing a delivery group property) or system actions (such as machine assignments).

- If a configuration change occurred since the previous check, the CSS synchronizes (copies) information to a secondary broker on the Controller. (The secondary broker is also known as the High Availability Service.)

  All configuration data is copied, not just items that changed since the previous check. The CSS imports the configuration data into a Microsoft SQL Server Express LocalDB database on the Controller. This database is referred to as the Local Host Cache database. The CSS ensures that the information in the Local Host Cache database matches the information in the site database. The Local Host Cache database is re-created each time synchronization occurs.

  Microsoft SQL Server Express LocalDB (used by the Local Host Cache database) is installed automatically when you install a Controller. (You can prevent this installation when installing a Controller from the command line.) The Local Host Cache database cannot be shared across Controllers. You do not need to back up the Local Host Cache database. It is re-created every time a configuration change is detected.

- If no changes have occurred since the last check, no data is copied.

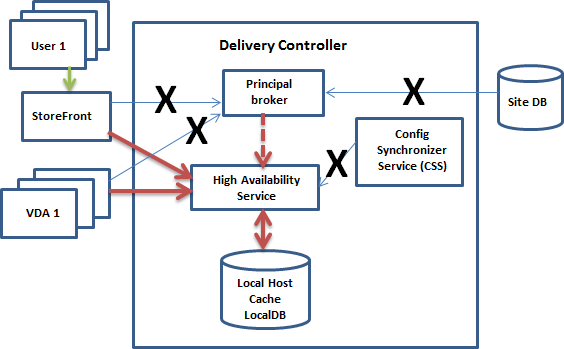

The following graphic illustrates the changes in communications paths if the principal broker loses contact with the site database (an outage begins).

During an outage

When an outage begins:

- The secondary broker starts listening for and processing connection requests.

- When the outage begins, the secondary broker does not have current VDA registration data, but when a VDA communicates with it, a registration process is triggered. During that process, the secondary broker also gets current session information about that VDA.

- While the secondary broker is handling connections, the principal broker continues to monitor the connection to the site database. When the connection is restored, the principal broker instructs the secondary broker to stop listening for connection information, and the principal broker resumes brokering operations. The next time a VDA communicates with the principal broker, a registration process is triggered. The secondary broker removes any remaining VDA registrations from the previous outage. The CSS resumes synchronizing information when it learns that configuration changes have occurred in the deployment.

In the unlikely event that an outage begins during a synchronization, the current import is discarded and the last known configuration is used.

The event log provides information about synchronizations and outages.

There is no time limit imposed for operating in outage mode.

The transition between normal and outage mode does not affect existing sessions. It affects only the launching of new sessions.

You can also intentionally trigger an outage. See Force an outage for details about why and how to do this.

Sites with multiple Controllers

Among its other tasks, the CSS routinely provides the secondary broker with information about all Controllers in the zone. (If your deployment does not contain multiple zones, this action affects all Controllers in the site.) Having that information, each secondary broker knows about all peer secondary brokers running on other Controllers in the zone.

The secondary brokers communicate with each other on a separate channel. Those brokers use an alphabetical list of the FQDNs of the machines they're running on to determine (elect) which secondary broker will be in charge of brokering operations in the zone if an outage occurs. During the outage, all VDAs register with the elected secondary broker. The non-elected secondary brokers in the zone actively reject incoming connection and VDA registration requests.

If an elected secondary broker fails during an outage, another secondary broker is elected to take over, and VDAs register with the newly elected secondary broker.
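The election and failover behavior can be sketched as follows. This is a minimal illustration, not the product's implementation. It assumes that the broker whose FQDN sorts first alphabetically is elected (the text states only that an alphabetical list is used). The function name and the example FQDNs are hypothetical.

```powershell
# Hypothetical helper: choose the elected secondary broker from the FQDNs
# of the Controllers in a zone that are currently available.
# Assumption: the FQDN that sorts first alphabetically is elected.
function Get-ElectedSecondaryBroker {
    param(
        [Parameter(Mandatory)]
        [string[]]$ControllerFqdn
    )
    # Sort-Object compares strings case-insensitively by default.
    $ControllerFqdn | Sort-Object | Select-Object -First 1
}

# Hypothetical zone with three Controllers.
$zone = 'ddc-c.example.local', 'ddc-a.example.local', 'ddc-b.example.local'
$elected = Get-ElectedSecondaryBroker -ControllerFqdn $zone

# If the elected broker fails during the outage, the election repeats
# among the remaining Controllers.
$remaining = $zone | Where-Object { $_ -ne $elected }
$next = Get-ElectedSecondaryBroker -ControllerFqdn $remaining
```

Because any Controller in the zone can end up elected, a sketch like this can help when predicting which machine is likely to carry the load during an outage.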

During an outage, if a Controller is restarted:

- If that Controller is not the elected broker, the restart has no impact.

- If that Controller is the elected broker, a different Controller is elected, causing VDAs to register. After the restarted Controller powers on, it automatically takes over brokering, which causes VDAs to register again. In this scenario, performance can be affected during the registrations.

If you power off a Controller during normal operations and then power it on during an outage, Local Host Cache cannot be used on that Controller if it is elected as the broker.

The event logs provide information about elections.

What is unavailable during an outage, and other differences

There is no time limit imposed for operating in outage mode. However, Citrix recommends restoring connectivity as quickly as possible.

During an outage:

- You cannot use Studio.

- You have limited access to the PowerShell SDK.

  - You must first:

    - Add a registry key EnableCssTestMode with a value of 1:

      New-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTestMode -PropertyType DWORD -Value 1

    - Use port 89:

      Get-BrokerMachine -AdminAddress localhost:89 | Select MachineName, ControllerDNSName, DesktopGroupName, RegistrationState

  - After running those commands, you can access:

    - All Get-Broker* cmdlets.

- Hypervisor credentials cannot be obtained from the Host Service. All machines are in the unknown power state, and no power operations can be issued. However, VMs on the host that are powered-on can be used for connection requests.

- An assigned machine can be used only if the assignment occurred during normal operations. New assignments cannot be made during an outage.

- Automatic enrollment and configuration of Remote PC Access machines is not possible. However, machines that were enrolled and configured during normal operation are usable.

- Server-hosted applications and desktop users might use more sessions than their configured session limits, if the resources are in different zones.

- Users can launch applications and desktops only from registered VDAs in the zone containing the currently active/elected secondary broker. Launches across zones (from a secondary broker in one zone to a VDA in a different zone) are not supported during an outage.

- If a site database outage occurs before a scheduled restart begins for VDAs in a delivery group, the restarts begin when the outage ends. This can have unintended results. For more information, see Scheduled restarts delayed due to database outage.

- Zone preference cannot be configured. If configured, preferences are not considered for session launch.

- Tag restrictions where tags are used to designate zones are not supported for session launches. When such tag restrictions are configured, and a StoreFront store’s advanced health check option is enabled, sessions might intermittently fail to launch.

Application and desktop support

LHC supports the following types of VDAs and delivery models:

| VDA type | Delivery model | VDA availability during LHC events |
|---|---|---|
| Multi-session OS | Applications and desktops | Always available. |
| Single-session OS static (assigned) | Desktops | Always available. |
| Power-managed single-session OS random (pooled) | Desktops | Not available by default. All session launch attempts to power-managed VDAs in pooled delivery groups fail by default. You can make them available for new connections during LHC events. For more information, see Enable using Web Studio and Enable using PowerShell. Important: Enabling access to power-managed single-session pooled machines can cause data and changes from previous user sessions to be present in subsequent sessions. |

Note:

Enabling access to power-managed desktop VDAs in pooled delivery groups doesn't affect how the configured ShutdownDesktopsAfterUse property works during normal operations. When access to these desktops during LHC is enabled, VDAs don't automatically restart after the LHC event is complete. Power-managed desktop VDAs in pooled delivery groups can retain data from previous sessions until the VDAs restart. A VDA restart can occur when a user logs off the VDA during non-LHC operations or when administrators restart the VDA.

Enable LHC for power-managed single-session OS pooled VDAs using Web Studio

Using Web Studio, you can make those machines available for new connections during LHC events on a per-delivery group basis:

- To enable this feature during delivery group creation, see Create delivery groups.

- To enable this feature for an existing delivery group, see Manage delivery groups.

Note:

This setting is available in Web Studio only for pooled desktop delivery groups that deliver power-managed VDAs.

Enable LHC for power-managed single-session OS pooled VDAs using PowerShell

To enable LHC for VDAs in a specific delivery group, follow these steps:

- Enable this feature at the site level by running this command:

  Set-BrokerSite -ReuseMachinesWithoutShutdownInOutageAllowed $true

- Enable LHC for a delivery group by running this command with the delivery group name specified:

  Set-BrokerDesktopGroup -Name "name" -ReuseMachinesWithoutShutdownInOutage $true

To change the default LHC availability for newly created pooled delivery groups with power managed VDAs, run the following command:

Set-BrokerSite -DefaultReuseMachinesWithoutShutdownInOutage $true

RAM size considerations

The LocalDB service can use approximately 1.2 GB of RAM (up to 1 GB for the database cache, plus 200 MB for running SQL Server Express LocalDB). The secondary broker can use up to 1 GB of RAM if an outage lasts for an extended interval with many logons occurring (for example, 12 hours with 10K users). These memory requirements are in addition to the normal RAM requirements for the Controller, so you might need to increase the total amount of RAM capacity.
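As a rough worked example of the figures above, the following sketch adds the Local Host Cache overhead to a Controller's baseline RAM. The baseline value is a hypothetical placeholder, and 1 GB is approximated as 1000 MB to match the "approximately 1.2 GB" figure.

```powershell
# Approximate Local Host Cache memory overhead, from the figures quoted above.
$localDbCacheMB    = 1000   # up to 1 GB for the database cache
$localDbEngineMB   = 200    # about 200 MB for running SQL Server Express LocalDB
$secondaryBrokerMB = 1000   # up to 1 GB during a long outage with many logons

# Hypothetical baseline: RAM this Controller needs for normal operations.
$controllerBaselineMB = 8000

$lhcOverheadMB = $localDbCacheMB + $localDbEngineMB + $secondaryBrokerMB
$suggestedTotalMB = $controllerBaselineMB + $lhcOverheadMB

"Local Host Cache overhead: $lhcOverheadMB MB; suggested total: $suggestedTotalMB MB"
```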

If you use a SQL Server Express installation for the site database, the server will have two sqlservr.exe processes.

CPU core and socket configuration considerations

A Controller’s CPU configuration, particularly the number of cores available to the SQL Server Express LocalDB, directly affects Local Host Cache performance, even more than memory allocation. This CPU overhead is observed only during the outage period when the database is unreachable and the secondary broker is active.

While LocalDB can use multiple cores (up to 4), it’s limited to only a single socket. Adding more sockets will not improve the performance (for example, having 4 sockets with 1 core each). Instead, Citrix recommends using multiple sockets with multiple cores. In Citrix testing, a 2x3 (2 sockets, 3 cores) configuration provided better performance than 4x1 and 6x1 configurations.

Storage considerations

As users access resources during an outage, the LocalDB grows. For example, during a logon/logoff test running at 10 logons per second, the database grew by 1 MB every 2-3 minutes. When normal operation resumes, the local database is recreated and the space is returned. However, sufficient space must be available on the drive where the LocalDB is installed to allow for the database growth during an outage. Local Host Cache also incurs more I/O during an outage: approximately 3 MB of writes per second, with several hundred thousand reads.
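To get a feel for how much free disk space to reserve, the growth rate from that test can be extrapolated. The sketch below assumes that growth stays linear at the quoted rate (1 MB every 2-3 minutes at 10 logons per second), which might not hold for other workloads. The function name is hypothetical.

```powershell
# Hypothetical helper: estimate LocalDB growth during an outage.
# Assumption: linear growth at the rate observed in the test quoted above.
function Get-LhcDatabaseGrowthEstimateMB {
    param(
        [Parameter(Mandatory)]
        [double]$OutageHours
    )
    $minutes = $OutageHours * 60
    [pscustomobject]@{
        LowMB  = [math]::Ceiling($minutes / 3)   # 1 MB every 3 minutes
        HighMB = [math]::Ceiling($minutes / 2)   # 1 MB every 2 minutes
    }
}

# Estimate for a 12-hour outage.
$estimate = Get-LhcDatabaseGrowthEstimateMB -OutageHours 12
$estimate
```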

Performance considerations

During an outage, one secondary broker handles all the connections, so in sites (or zones) that load balance among multiple Controllers during normal operations, the elected secondary broker might need to handle many more requests than normal during an outage. Therefore, CPU demands will be higher. Every secondary broker in the site (zone) must be able to handle the additional load imposed by the Local Host Cache database and all the affected VDAs, because the secondary broker elected during an outage can change.

VDI limits:

- In a single-zone VDI deployment, up to 10,000 VDAs can be handled effectively during an outage.

- In a multi-zone VDI deployment, up to 10,000 VDAs in each zone can be handled effectively during an outage, to a maximum of 40,000 VDAs in the site. For example, each of the following sites can be handled effectively during an outage:

- A site with four zones, each containing 10,000 VDAs.

- A site with seven zones, one containing 10,000 VDAs, and six containing 5,000 VDAs each.
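The limits above can be expressed as a simple check. This is a sketch only: the function name is hypothetical, and it encodes just the two stated limits (10,000 VDAs per zone and 40,000 VDAs per site).

```powershell
# Hypothetical helper: check a site layout against the stated VDI limits.
function Test-LhcVdaLimit {
    param(
        [Parameter(Mandatory)]
        [int[]]$VdasPerZone
    )
    $maxPerZone = 10000
    $maxPerSite = 40000
    $total   = ($VdasPerZone | Measure-Object -Sum).Sum
    $largest = ($VdasPerZone | Measure-Object -Maximum).Maximum
    ($largest -le $maxPerZone) -and ($total -le $maxPerSite)
}

# The two example sites from the list above.
$fourZones  = Test-LhcVdaLimit -VdasPerZone 10000, 10000, 10000, 10000
$sevenZones = Test-LhcVdaLimit -VdasPerZone 10000, 5000, 5000, 5000, 5000, 5000, 5000

# A layout in which one zone exceeds the per-zone limit.
$oversizedZone = Test-LhcVdaLimit -VdasPerZone 12000, 5000
```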

During an outage, load management within the site can be affected. Load evaluators (and especially, session count rules) can be exceeded.

During the time it takes all VDAs to register with a secondary broker, that service might not have complete information about current sessions. So, a user connection request during that interval can result in a new session being launched, even though reconnection to an existing session was possible. This interval (while the “new” secondary broker acquires session information from all VDAs during re-registration) is unavoidable. Sessions that are connected when an outage starts are not impacted during the transition interval, but new sessions and session reconnections might be.

This interval occurs whenever VDAs must register:

- An outage starts: When migrating from a principal broker to a secondary broker.

- Secondary broker failure during an outage: When migrating from a secondary broker that failed to a newly elected secondary broker.

- Recovery from an outage: When normal operations resume, and the principal broker resumes control.

You can decrease the interval by lowering the Citrix Broker Protocol’s HeartbeatPeriodMs registry value (default = 600000 ms, which is 10 minutes). This heartbeat value is double the interval the VDA uses for pings, so the default value results in a ping every 5 minutes.

For example, the following command changes the heartbeat to five minutes (300000 milliseconds), which results in a ping every 2.5 minutes:

New-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer -Name HeartbeatPeriodMs -PropertyType DWORD -Value 300000

Use caution when changing the heartbeat value. Increasing the frequency results in greater load on the Controllers during both normal and outage modes.

The interval cannot be eliminated entirely, no matter how quickly the VDAs register.
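The relationship between the heartbeat value and the ping interval can be checked before changing the registry. The following sketch uses a hypothetical helper name and relies only on the statement that the heartbeat value is double the ping interval.

```powershell
# Hypothetical helper: convert a HeartbeatPeriodMs value into the resulting
# VDA ping interval in minutes (the heartbeat is double the ping interval).
function Get-VdaPingIntervalMinutes {
    param(
        [Parameter(Mandatory)]
        [int]$HeartbeatPeriodMs
    )
    ($HeartbeatPeriodMs / 2) / 60000
}

$defaultPing = Get-VdaPingIntervalMinutes -HeartbeatPeriodMs 600000
$reducedPing = Get-VdaPingIntervalMinutes -HeartbeatPeriodMs 300000

"Default: ping every $defaultPing minutes; reduced: ping every $reducedPing minutes"
```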

The time it takes to synchronize between secondary brokers increases with the number of objects (such as VDAs, applications, groups). For example, synchronizing 5000 VDAs might take 10 minutes or more to complete.

Differences from XenApp 6.x releases

Although this Local Host Cache implementation shares the name of the Local Host Cache feature in XenApp 6.x and earlier XenApp releases, there are significant improvements. This implementation is more robust and immune to corruption. Maintenance requirements are minimized, such as eliminating the need for periodic dsmaint commands. This Local Host Cache is an entirely different implementation technically.

Manage Local Host Cache

For Local Host Cache to work correctly, the PowerShell execution policy on each Controller must be set to RemoteSigned, Unrestricted, or Bypass.
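One way to check this requirement on a Controller is sketched below. The function name is hypothetical. The sketch assumes that the effective policy returned by Get-ExecutionPolicy (with no scope specified) is the relevant one, which the text does not state.

```powershell
# Hypothetical helper: test whether an execution policy is one of the
# values that Local Host Cache requires.
function Test-LhcExecutionPolicy {
    param(
        # Defaults to the effective policy of the current session.
        [string]$Policy = (Get-ExecutionPolicy).ToString()
    )
    $Policy -in 'RemoteSigned', 'Unrestricted', 'Bypass'
}

# Check the current session's effective policy.
if (Test-LhcExecutionPolicy) {
    'Execution policy is suitable for Local Host Cache.'
} else {
    'Execution policy must be RemoteSigned, Unrestricted, or Bypass.'
}
```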

SQL Server Express LocalDB

The Microsoft SQL Server Express LocalDB software that Local Host Cache uses is installed automatically when you install a Controller or upgrade a Controller from a version earlier than 7.9. Only the secondary broker communicates with this database. You cannot use PowerShell cmdlets to change anything about this database. The LocalDB cannot be shared across Controllers.

The SQL Server Express LocalDB database software is installed regardless of whether Local Host Cache is enabled.

To prevent its installation, install or upgrade the Controller using the XenDesktopServerSetup.exe command, and include the /exclude "Local Host Cache Storage (LocalDB)" option. However, keep in mind that the Local Host Cache feature will not work without the database, and you cannot use a different database with the secondary broker.

Installation of this LocalDB database has no effect on whether you install SQL Server Express for use as the site database.

For information about replacing an earlier SQL Server Express LocalDB version with a newer version, see Replace SQL Server Express LocalDB.

Default settings after product installation and upgrade

During a new installation of Citrix Virtual Apps and Desktops (minimum version 7.16), Local Host Cache is enabled.

After an upgrade (to version 7.16 or later), Local Host Cache is enabled if there are fewer than 10,000 VDAs in the entire deployment.

Enable and disable Local Host Cache

- To enable Local Host Cache, enter:

  Set-BrokerSite -LocalHostCacheEnabled $true

  To determine whether Local Host Cache is enabled, enter Get-BrokerSite. Check that the LocalHostCacheEnabled property is True.

- To disable Local Host Cache, enter:

  Set-BrokerSite -LocalHostCacheEnabled $false

Remember: As of XenApp and XenDesktop 7.16, connection leasing (the feature that preceded Local Host Cache beginning with version 7.6) was removed from the product, and is no longer available.

Verify that Local Host Cache is working

To verify that Local Host Cache is set up and working correctly:

- Ensure that synchronization imports complete successfully. Check the event logs.

- Ensure that the SQL Server Express LocalDB database was created on each Delivery Controller. This confirms that the secondary broker can take over, if needed.

  - On the Delivery Controller server, browse to C:\Windows\ServiceProfiles\NetworkService.

  - Verify that HaDatabaseName.mdf and HaDatabaseName_log.ldf are created.

- Force an outage on the Delivery Controllers. After you’ve verified that Local Host Cache works, remember to place all the Controllers back into normal mode. This can take approximately 15 minutes.
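The database file check in the steps above can be scripted. The following sketch uses the folder and file names given in those steps and would need to be run on each Delivery Controller. Reading the NetworkService profile folder might require an elevated session.

```powershell
# Folder and file names as given in the verification steps above.
$profilePath = 'C:\Windows\ServiceProfiles\NetworkService'
$files = 'HaDatabaseName.mdf', 'HaDatabaseName_log.ldf'

# Collect any expected file that is not present in the folder.
$missing = $files | Where-Object {
    -not (Test-Path -Path (Join-Path -Path $profilePath -ChildPath $_))
}

if ($missing) {
    "Missing Local Host Cache database files: $($missing -join ', ')"
} else {
    'Both Local Host Cache database files are present.'
}
```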

Event logs

Event logs indicate when synchronizations and outages occur. In event viewer logs, outage mode is referred to as HA mode.

Config Synchronizer Service:

During normal operations, the following events can occur when the CSS imports the configuration data into the Local Host Cache database using the Local Host Cache broker.

- 503: The Citrix Config Sync Service received an updated configuration. This event indicates the start of the synchronization process.

- 504: The Citrix Config Sync Service imported an updated configuration. The configuration import completed successfully.

- 505: The Citrix Config Sync Service failed an import. The configuration import did not complete successfully. If a previous successful configuration is available, it is used if an outage occurs. However, it will be out-of-date from the current configuration. If there is no previous configuration available, the service cannot participate in session brokering during an outage. In this case, see the Troubleshoot section, and contact Citrix Support.

- 507: The Citrix Config Sync Service abandoned an import because the system is in outage mode and the Local Host Cache broker is being used for brokering. The service received a new configuration, but the import was abandoned because an outage occurred. This is expected behavior.

- 510: No Configuration Service configuration data received from primary Configuration Service.

- 517: There was a problem communicating with the primary Broker.

- 518: Config Sync script aborted because the secondary Broker (High Availability Service) is not running.

High Availability Service:

This service is also known as the Local Host Cache broker.

- 3502: An outage occurred and the Local Host Cache broker is performing brokering operations.

- 3503: An outage was resolved and normal operations have resumed.

- 3504: Indicates which Local Host Cache broker is elected, plus other Local Host Cache brokers involved in the election.

- 3507: Provides a status update of Local Host Cache every 2 minutes which indicates that Local Host Cache mode is active on the elected broker. Contains a summary of the outage including outage duration, VDA registration, and session information.

- 3508: Announces Local Host Cache is no longer active on the elected broker and normal operations have been restored. Contains a summary of the outage including outage duration, number of machines that registered during the Local Host Cache event, and number of successful launches during the LHC event.

- 3509: Notifies that Local Host Cache is active on the non-elected broker(s). Contains an outage duration every 2 minutes and indicates the elected broker.

- 3510: Announces Local Host Cache is no longer active on the non-elected broker(s). Contains the outage duration and indicates the elected broker.
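To review these events from PowerShell rather than Event Viewer, something like the following sketch might help. It assumes that the events are written to the Windows Application log, which this article does not state. The function name is hypothetical, and the descriptions are abbreviated from the lists above.

```powershell
# A subset of the event IDs listed above, with abbreviated meanings.
$lhcEvents = @{
    503  = 'CSS received an updated configuration (synchronization started)'
    504  = 'CSS imported an updated configuration'
    505  = 'CSS failed an import'
    507  = 'CSS abandoned an import because the system is in outage mode'
    3502 = 'Outage occurred; Local Host Cache broker is brokering'
    3503 = 'Outage resolved; normal operations resumed'
    3504 = 'Election result'
}

# Hypothetical helper (Windows only). Assumption: the events are in the
# Application log. Define the function here, then call it on a Controller.
function Get-LhcEvent {
    param([int]$MaxEvents = 50)
    Get-WinEvent -FilterHashtable @{ LogName = 'Application'; Id = @($lhcEvents.Keys) } -MaxEvents $MaxEvents |
        Select-Object TimeCreated, Id, ProviderName,
            @{ Name = 'Meaning'; Expression = { $lhcEvents[$_.Id] } }
}
```

On a Controller, running `Get-LhcEvent -MaxEvents 20` would list the most recent matching events. Because event IDs are not unique across providers, check the ProviderName column to confirm that each event comes from a Citrix service.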

Force an outage

You might want to deliberately force an outage in the following situations:

- If your network is going up and down repeatedly. Forcing an outage until the network issues are resolved prevents continuous transition between normal and outage modes (and the resulting frequent VDA registration storms).

- To test a disaster recovery plan.

- To help ensure that Local Host Cache is working correctly.

- While replacing or servicing the site database server.

To force an outage, edit the registry of each server containing a Delivery Controller. In HKLM\Software\Citrix\DesktopServer\LHC, create and set OutageModeForced as REG_DWORD to 1. This setting instructs the Local Host Cache broker to enter outage mode, regardless of the state of the database. Setting the value to 0 takes the Local Host Cache broker out of outage mode.
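The registry change can also be made from PowerShell, in the same style as the other commands in this article. This sketch uses only the key, value name, and type given above. It assumes that the LHC key already exists and that the session is elevated. Run the two commands as separate steps, not together, on each Controller.

```powershell
$lhcKey = 'HKLM:\SOFTWARE\Citrix\DesktopServer\LHC'

# Step 1: force outage mode on this Controller.
# -Force overwrites the value if it already exists.
New-ItemProperty -Path $lhcKey -Name OutageModeForced -PropertyType DWORD -Value 1 -Force

# Step 2 (later): take this Controller out of forced outage mode.
Set-ItemProperty -Path $lhcKey -Name OutageModeForced -Value 0
```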

To verify events, monitor the Current_HighAvailabilityService log file in C:\ProgramData\Citrix\WorkspaceCloud\Logs\Plugins\HighAvailabilityService.

Troubleshoot

Several troubleshooting tools are available when a synchronization import to the Local Host Cache database fails and a 505 event is posted.

CDF tracing: Contains options for the ConfigSyncServer and BrokerLHC modules. Those options, along with other broker modules, will likely identify the problem.

Report: If a synchronization import fails, you can generate a report. This report stops at the object causing the error. This report feature affects synchronization speed, so Citrix recommends disabling it when not in use.

To enable and produce a CSS trace report, enter the following command:

New-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTraceMode -PropertyType DWORD -Value 1

The HTML report is posted at C:\Windows\ServiceProfiles\NetworkService\AppData\Local\Temp\CitrixBrokerConfigSyncReport.html.

After the report is generated, enter the following command to disable the reporting feature:

Set-ItemProperty -Path HKLM:\SOFTWARE\Citrix\DesktopServer\LHC -Name EnableCssTraceMode -Value 0

Export the broker configuration: Provides the exact configuration for debugging purposes.

Export-BrokerConfiguration | Out-File <file-pathname>

For example, Export-BrokerConfiguration | Out-File C:\BrokerConfig.xml.

Local Host Cache PowerShell commands

You can manage Local Host Cache (LHC) on your Delivery Controllers using PowerShell commands.

The PowerShell module is located at the following location on the Delivery Controllers:

C:\Program Files\Citrix\Broker\Service\ControlScripts

Important:

Run this module only on the Delivery Controllers.

Import PowerShell module

To import the module, run the following on your Delivery Controller.

cd "C:\Program Files\Citrix\Broker\Service\ControlScripts"

Import-Module .\HighAvailabilityServiceControl.psm1

PowerShell commands to manage LHC

The following commands help you to activate and manage the LHC mode on the Delivery Controllers.

| Cmdlets | Function |
|---|---|
| Enable-LhcForcedOutageMode | Places the Broker in LHC mode. LHC database files must have been successfully created by the ConfigSync Service for Enable-LhcForcedOutageMode to function properly. This cmdlet only forces LHC on the Delivery Controller that it was run on. For LHC to become active, this command must be run on all the Delivery Controllers within the zone. |
| Disable-LhcForcedOutageMode | Takes the Broker out of LHC mode. This cmdlet only disables LHC mode on the Delivery Controller that it was run on. Disable-LhcForcedOutageMode must be run on all the Delivery Controllers within the zone. |
| Set-LhcConfigSyncIntervalOverride | Sets the interval at which the Citrix Config Synchronizer Service (CSS) checks for configuration changes within the site. The time interval can range from 60 seconds (one minute) to 3600 seconds (one hour). This setting only applies to the Delivery Controller on which it was run. For consistency across Delivery Controllers, consider running this cmdlet on each Delivery Controller. For example: Set-LhcConfigSyncIntervalOverride -Seconds 1200 |
| Clear-LhcConfigSyncIntervalOverride | Resets the interval at which the Citrix Config Synchronizer Service (CSS) checks for configuration changes within the site to the default value of 300 seconds (five minutes). This setting only applies to the Delivery Controller on which it was run. For consistency across Delivery Controllers, consider running this cmdlet on each Delivery Controller. |
| Enable-LhcHighAvailabilitySDK | Enables access to all the Get-Broker* cmdlets within the Delivery Controller that it was run on. |
| Disable-LhcHighAvailabilitySDK | Disables access to the Broker cmdlets within the Delivery Controller that it was run on. |

Note:

- Use port 89 when running the Get-Broker* cmdlets on the Delivery Controller. For example:

  Get-BrokerMachine -AdminAddress localhost:89

- When not in LHC mode, the LHC Broker on the Delivery Controller only holds configuration information.

- During LHC mode, the LHC Broker on the elected Delivery Controller holds the following information:

  - Resource states

  - Session details

  - VDA registrations

  - Configuration information